Quick Sort

AfterAcademy Tech

•

10 Aug 2020

Difficulty: Hard

Asked in: Microsoft, Google, Goldman Sachs

Sorting is a process of arranging items systematically. There are several ways to sort a list of items, you may read about some of the basic algorithms we have discussed here.

Understanding The Problem

Problem Description

Given an array of integers arr[], write a program to sort the array in ascending order using Quick Sort.

Example 1

Input: arr[] = [5,2,3,1]

Output: [1,2,3,5]

Explanation: Return the sorted array

Example 2

Input: arr[] = [9,-3,5,2,6,8,-6,1,3]

Output: [-6,-3,1,2,3,5,6,8,9]

Solution

As you might know for sorting or comparison-based sorting algorithm the best time complexity that is possible is NlogN. Some of the algorithms that provide this time complexity are merge sort and quicksort. You could read about the merge sort algorithm and understand how the sorting takes place and you will also get to know that the merge sort uses an auxiliary space complexity of O(N).

We will discuss quick sort which guarantees with a very high probability of time complexity of NlogN in average case but has N² in its worst case. Even though the time complexity is N² in the worst case, It is mostly used by various applications because its space complexity is O(1). This algorithm is so much efficient in practical use cases that the sort function of most of the languages uses quicksort.

Let us see how the quicksort algorithm works in practice for this array.

[35, 33, 42, 10, 14, 19, 27, 44, 26, 31]

At each iteration of quicksort, we choose the pivot element and try to bring it at its correct position. Let us see that in our implementation of quicksort, we always choose the end element of the array as a pivot and try to bring it at its correct position. What this means that we will try to bring all the elements that are smaller than the pivot element to the left side of it and all the elements greater than the pivot element to the right side of it.

The pivot element is the element that will try to be at the current position is known as the pivot element.

In the above case, in the first iteration, we choose 31 as the pivot, now 10, 14, 19, 27, 26 will go to the left of 31 and 35, 33, 42, 44 will go to the right of 31. Now you have two smaller unsorted arrays [10, 14, 19, 27, 26] 31 [35, 33, 42, 44], so we can repeat the process as the pivot element 31 is at its correct position.

Now if we go on, there will be the case when the size of the subarray becomes 1, which will be considered as the base case.

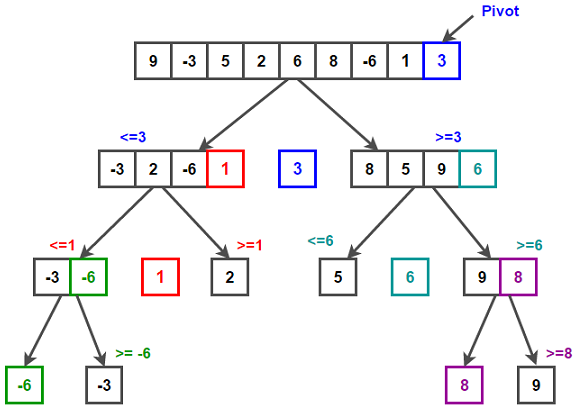

If we follow the same procedure for example 2. Then each step will work like this:

Solution Steps

- Find a

pivotitem in the array. This item is the basis for comparison for a single round. - Start a pointer (the

left pointer) at the first item in the array. - Start a pointer (the

right pointer) at the last item in the array. - While

arr[left pointer] < pivot value, move the left pointer to the right. Continue until the arr[left pointer] >= pivot value. - While

arr[right pointer] > pivot value, move the right pointer to the left. Continue until the arr[right pointer] <= pivot value. - If

arr[left pointer] <= arr[right pointer], then swap the values. - Move the

left pointerto the right by one and theright pointerto the left by one. - If the

left pointerandright pointerdoesn’t meet, go to step 1.

Pseudo Code

void quicksort(int a[], int start, int end)

{

if(start < end)

{

int pi

pi= partition(a, start, end)

quicksort(a, start, pi-1)

quicksort(a, pi+1, end)

}

}

// partition function

int partition (int arr[], int start, int end)

{

int pivot = arr[end] // selecting last element as pivot

int i = (start - 1) // index of smaller element

for (int j = start to j <= end- 1)

{

// If the current element is smaller than or equal to pivot

if (arr[j] <= pivot)

{

i = i + 1 // increment index of smaller element

swap(arr[i], arr[j])

}

}

swap(arr[i + 1], arr[high])

return (i + 1)

}

Complexity Analyisis

- Worst-case time complexity: O(n²)

- Best-case time complexity: O(n log n) (simple partition)or O(n) (three-way partition and equal keys)

- Average-case time complexity: O(n log n)

- Worst-case space complexity: O(n) auxiliary (naive)

Critical Ideas To Think

- What if

start > end? that means thearray[start::end]is an invalid array. start > end. Is this case possible? If yes, then think of such a case!- Can we choose a random pivot instead of

arr[end]? If yes, then will it affect the complexity? - Can you write the recurrence relation for the quicksort algorithm?

- Even though, quick sort worst-case complexity is O(n²), though it preferred more than merge-sort! Why?

- Is quick sort is a stable sorting algorithm? If not, how?

- For a better gist of comparison of sorting algorithms, read here.

Suggested Problems to Solve

- Find kth largest in an unsorted array.

- Quicksort in a singly linked list.

- Top K frequent elements.

- K closest points to origin.

- The painter’s partition problem

- Maximum average sum partition of an array

Please comment down below if you have a better insight in the above approach.

Happy Coding, Enjoy Algorithms!

Written by AfterAcademy Tech

Share this article and spread the knowledge

Read Similar Articles

AfterAcademy Tech

Sort List - Merge Sort

Sort a linked list using Merge Sort. This is a very famous interview problem that demonstrates the concept of recursion. This problem is quite similar to Merge Sort in Arrays.

AfterAcademy Tech

Introduction To Sorting Algorithms - Selection and Insertion sort

Sorting is a famous problem solving approach during the interview. In this blog, we are going to discuss about the insertion and selection sort algorithm.

AfterAcademy Tech

Sort a linked list using Insertion Sort - Interview Problem

Sort a linked list using insertion sort and return its head.

AfterAcademy Tech

Merge Sort

Merge sort is a divide-and-conquer algorithm based on the idea of breaking down a list into several sub-lists until each sublist consists of a single element and merging those sublists in a manner that results into a sorted list.