Analysis of Recursion in Programming

AfterAcademy Tech

•

24 Dec 2019

In this blog, we will analyze the recursive algorithm using the Recurrence Tree Method and Master theorem. We will also discuss the advantages and disadvantages of recursion.

For every recursive algorithm, we can write recurrence relation to analyse the time complexity of the algorithm.

Recurrence relation of recursive algorithms

A recurrence relation is an equation that defines a sequence where any term is defined in terms of its previous terms.

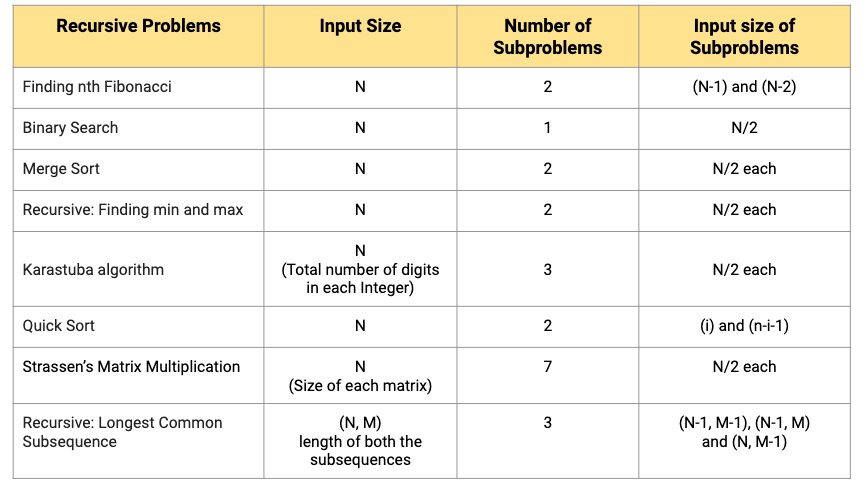

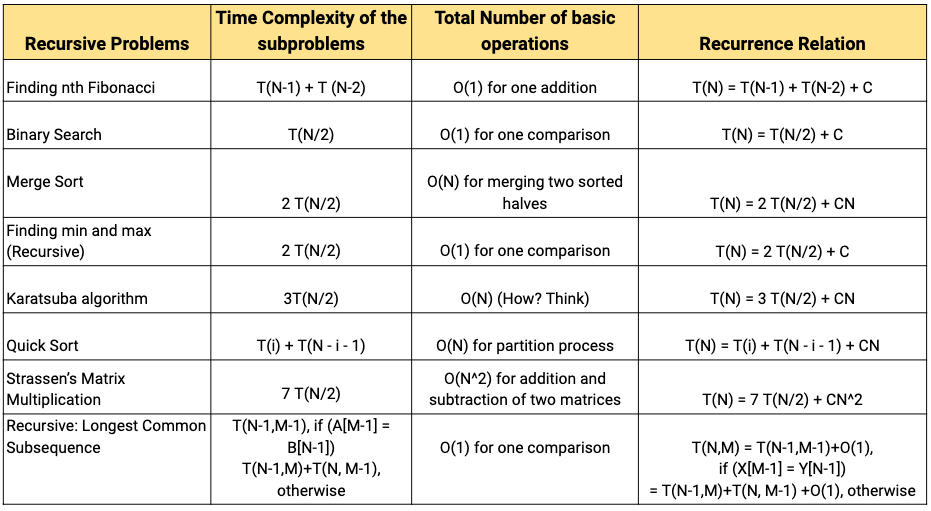

The recurrence relation for the time complexity of some problems are given below:

Fibonacci Number

T(N) = T(N-1) + T(N-2)

Base Conditions: T(0) = 0 and T(1) = 1

Binary Search

T(N) = T(N/2) + C

Base Condition: T(1) = 1

Merge Sort

T(N) = 2 T(N/2) + CN

Base Condition: T(1) = 1

Recursive Algorithm: Finding min and max in an array

T(N) = 2 T(N/2) + 2

Base Condition: T(1) = 0 and T(2) = 1

Karastuba algorithm for fast multiplication

T(N) = 3 T(N/2) + CN

Base Condition: T(0) = 1 and T(1) = 1

Quick Sort

T(N) = T(i) + T(N-i-1) + CN

The time taken by quick sort depends upon the distribution of the input array and partition strategy. T(i) and T(N-i-1) are two smaller subproblems after the partition where i is the number of elements that are smaller than the pivot. CN is the time complexity of the partition process where C is a constant. .

Worst Case: This is a case of the unbalanced partition where the partition process always picks the greatest or smallest element as a pivot(Think!).For the recurrence relation of the worst case scenario, we can put i = 0 in the above equation.

T(N) = T(0) + T(N-1) + CN

which is equivalent to

T(N) = T(N-1) + CN

Best Case: This is a case of the balanced partition where the partition process always picks the middle element as pivot. For the recurrence relation of the worst case scenario, put i = N/2 in the above equation.

T(N) = T(N/2) + T(N/2-1) + CN

which is equivalent to

T(N) = 2T(N/2) + CN

Average Case: For average case analysis, we need to consider all possible permutation of input and time taken by each permutation.

T(N) = (for i = 0 to N-1) ∑ ( T(i) + T(N-i-1) ) / N

Note: This looks mathematically complex but we can find several other intuitive ways to analyse the average case of quick sort.

Analyzing the Efficiency of Recursive Algorithms

Step 1: Identify the number of sub-problems and a parameter (or parameters) indicating an input’s size of each sub-problem (function call with smaller input size)

Step 2: Add the time complexities of the sub-problems and the total number of basic operations performed at that stage of recursion.

Note: Check whether the number of times the basic operation is executed can vary on different inputs of the same size; if it can, the worst-case, average-case, and best-case efficiencies must be investigated separately.

Step3: Set up a recurrence relation, with a correct base condition, for the number of times the basic operation is executed.

Step4: Solve the recurrence or, at least, ascertain the order of growth of its solution. There are several ways to analyse the recurrence relation but we are discussing here two popular approaches of solving recurrences:

- Method 1: Recursion Tree Method

- Method 2: Master Theorem

Method 1: Recursion Tree Method

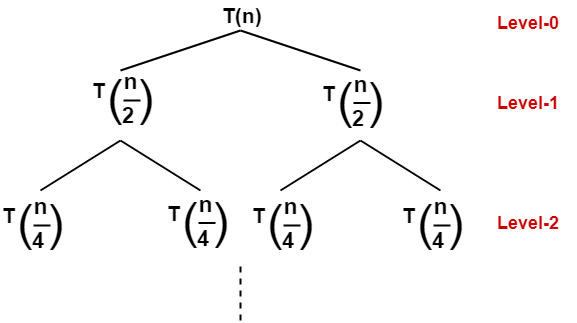

A recurrence tree is a tree where each node represents the cost of a certain recursive subproblem. We take the sum of each value of nodes to find the total complexity of the algorithm.

Steps for solving a recurrence relation

- Draw a recursion tree based on the given recurrence relation.

- Determine the number of levels, cost at each level and cost of the last level.

- Add the cost of all levels and simplify the expression.

Let us solve the given recurrence relation by Recurrence Tree Method

T(N) = 2*T(N/2) + CN

From the above recurrence relation, we can find that

- The problem of size N is divided into two sub-problems of size N/2.

- The cost of dividing a sub-problem and then combining its solution of size N is CN.

- Each time, the problem will be divided into half, until the size of the problem becomes 1.

The recursion tree for the above relation will be

Cost at each level

The cost of dividing and combining the solution of size N/2^k is N/2^k and the number of nodes at each level is 2^k.

So, the cost at each level is (N/2^k)*(N^k) = N.

Number of levels

Size of problem at level-0 : N/2^0

Size of problem at level-1 : N/2^1

Size of problem at level-2 : N/2^2

Size of problem at level-3 : N/2^3

.

.

.

.

Size of problem at level-k : N/2^k

Now, we know that the size of the problem at the last level is 1.

Let us suppose the last level be k. Then

N/2^k = 1

N = 2^k

Taking log both sides

log(N) = log(2^k)

log(N) = k*log(2)

k = log2(N)

The number of levels in the recursion tree is log2(N).

Cost of the last level

The cost at the last level where the size of the problem is 1 and the number of subproblems is N.

cost at last level = N*T(1) = O(N)

Now, let’s add up the cost at each level

= { N + N + N + ..logN times } + O(N)

= O(NlogN) + O(N)

= O(NlogN)

The time complexity of the above recurrence relation is O(N logN).

Method 2: Master theorem

Master theorem states that for a recurrence relation of form

T(N) = aT(N/b) + f(N) where a >= 1 and b > 1

If f(N) = O(N^k) and k ≥ 0, then

Case 1: T(N) = O(N^logb(a)), if k < logb(a).

Case 2: T(N) = O((N^k)*logN), if k = logb(a).

Case 3: T(N) = O(N^k), if k > logb(a)

Note: We will skip the mathematical proof, but if you are interested in knowing the maths behind this, it's a task for you to look at the proof of this theorem.

Let's analyse the time complexity using the master theorem.

Example 1

T(N) = T(N/2) + C

The above recurrence relation is of binary search. Comparing this with master theorem, we get a = 1, b = 2 and k = 0 because f(N) = C = C(N^0)

Here logb(a) = k, so we can apply case 2 of the master theorem.(Think!)

T(n) = (N⁰*log(N)) = O(logN).

Example 2

T(N) = 2*T(N/2) + CN

The above recurrence relation is of merge sort. Comparing this with master theorem,a = 2, b = 2 and f(N) = CN. Comparing left and right sides of f(N), we get k = 1.

logb(a) = log2(2) = 1 = K

So, we can apply the case 2 of the master theorem. (Think!)

=> T(N) = O(N¹*log(N)) = O(NlogN).

Example 3

T(N) = 2*T(N/2) + O(1)

The above recurrence relation is of finding min-max in an array through the divide and conquer approach. Comparing this with master theorem,a = 2, b = 2 and f(N) = 1. Comparing left and right sides of f(N), we get k = 0.

logb(a) = log2(2) = 1 > k

So, we can apply the case 1 of the master thorem (Think!)

T(N) = O(N^logb(a)) = O(N¹) = O(N)

Example 4

T(N) = 2T(N/2) + CN^2

Comparing this with master theorem, a = 2, b = 2 and f(N) = CN²

comparing left and right sides of f(n), we get k = 2.

logb(a) = log2(2) = 1 < k

So, we can apply the case 3 of the master thorem.(Think!)

T(N) = O(N^k)= O(N²)

Pros and Cons of recursive programming

Pros

- Recursion can reduce time complexity.

- Recursion adds clarity and reduces the time needed to write and debug code.

- Recursion can lead to more readable and efficient algorithm descriptions.

- Recursion is a useful way of defining things that have a repeated similar structural form like tree traversal.

Cons

- Recursion uses more memory like using run-time stack.

- Recursion can be slow.

- If recursion is too deep, then there is a danger of running out of space on the stack and ultimately program crashes.

Critical Concepts to explore in Recursion

- Different Types of recursion and properties

- Iteration vs Recursion comparison

- Why Stack Overflow error occurs in recursion?

- How to convert recursive code into iterative code and vice versa?

Suggested Problems to solve in Recursion

- Find an element in Bitonic array

- Search for a Range in a sorted array

- Missing Number

- Search a 2-D Matrix

- Median in row wise sorted matrix

- Find minimum element in sorted and rotated array

- Recursive Insertion Sort

- Median of two sorted array of same size

- Inversion count in an array

- Longest Common Prefix

Happy coding! Enjoy Algorithms.

AfterAcademy Data Structure And Algorithms Online Course — Admissions Open

Written by AfterAcademy Tech

Share this article and spread the knowledge

Read Similar Articles

AfterAcademy Tech

What is recursion in programming?

Using recursion, certain problems can be solved quite easily. Several algorithms design techniques and data structures are based on recursive thinking.

AfterAcademy Tech

Recursive Insertion Sort

Write a program for the recursive implementation of Insertion Sort. Insertion Sort is used to sort a given array. This problem will sharpen your recursion skills.

AfterAcademy Tech

What is the difference between iteration and recursion?

Iteration and Recursion are programming methodologies with similar objective and thus one might seem trivial compared to another, but that's not the case. We will be discussing the important differences between iteration and recursion and how both are useful.

AfterAcademy Tech

Reverse a Stack Using Recursion

Given a stack of integers st, write a program to reverse the stack using recursion. This problem will clear the concept of recursion.